Landsat Data Enriches Google Earth

NASA Technology

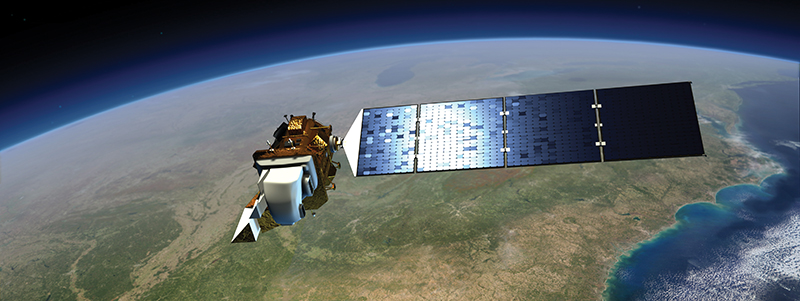

When NASA launched the Earth Resources Technology Satellite, later known as Landsat, in July 1972, the first spacecraft dedicated to monitoring Earth’s surface carried two imaging instruments—a camera and an experimental multispectral scanner (MSS) that recorded data in green and red spectral bands and two infrared bands. Expectations for the scanner, whose scan mirror buzzed distressingly during testing as it whirred back and forth at 13 times per second, were low.

Among the concerns voiced before launch were fears that its moving parts would not work properly in space, but it was also unknown whether a scanner could produce high-quality digital imagery while careening around the planet at a speed of 14 orbits per day. After launch, the engineers at NASA and the scientists at the US Geological Survey (USGS)—who would manage the project once the satellite was in orbit—were shocked at the high fidelity of the data the chattering imager sent back, and it almost immediately became the vehicle’s primary imager.

The MSS sent back 300,000 images over its six-year lifespan and changed scientists’ approach to remote sensing, adding the dimension of time to analyses of Earth’s resources and surface covers. Now that researchers could access calibrated images of the same areas over the course of the seasons and years, attention moved away from merely building libraries of the spectral signatures of Earth’s features and toward monitoring changes and patterns over time.

Landsat 1 data were used to monitor water levels of Lake Okeechobee and build a better understanding of Miami’s local ecology and its water needs. Flood dynamics along the Mississippi River and Cooper’s Creek in Australia were studied for disaster assessment. Images from the MSS were proven effective for improving crop predictions in Kansas and were used to monitor clear-cutting of forests in Washington and evaluate compliance with timber harvest licenses.

However, as noted in the 1997 paper, “The Landsat Program: Its Origins, Evolution, and Impacts,” none of the early attempts at using satellite data to address global issues like food security, desertification trends, resource sustainability, and deforestation impacts led to more profound, worldwide applications. Gaps in time data, coarse imagery, and the need for more input from other systems hampered any comprehensive results. But as the paper’s authors explain, “It was believed with certainty that the data and imagery would have commercial, as well as public value” if more of it could be collected.

Early attempts at public-private partnerships to facilitate commercialization may have further hampered broader results. Operations of the fourth and fifth Landsat satellites were turned over to the private sector, leading to dramatic price increases, with the cost of a single Landsat image rising to several thousand dollars. Even after the government resumed control of the program in 1999, it still charged several hundred dollars for a single image, making global analyses cost-prohibitive.

By then, the Thematic Mapper aboard Landsat 5 and the Enhanced Thematic Mapper-Plus aboard Landsat 7 had become the primary Earth imagers, replacing the MSS sensor aboard Landsat 5 and the prior Landsat satellites. These second-generation Landsat sensors collect grayscale data across the visible spectrum at a 15-meter spatial resolution, as opposed to the first MSS’s 80-meter resolution, and also use seven visible and infrared bands for imaging and measuring temperature, at resolutions of 30 and 60 meters.

“Prior to 1999, when we launched Landsat 7, Landsat 4 and 5 had been operated by a private firm, and their cost per scene had gone up as high as $4,400, which very few people could afford,” says James Irons, Landsat Data Continuity Mission project scientist at Goddard Space Flight Center, where the Landsat program has been housed since its inception. USGS agreed to reduce the cost to $600, but, Irons says, that was still prohibitive to anyone who wanted global data. He and colleagues within USGS continued the push to make it all publicly available for free.

“In 2008, they made the decision—which I refer to as ‘institutionally courageous’—to distribute those data at no cost to those requesting it,” he says.

The data crunchers at Google, the Internet giant based in Mountain View, California, wasted little time in taking advantage of the new resource.

Technology Transfer

At the end of 2010, Google unveiled its Google Earth Engine, a cloud computing platform for accessing and processing Landsat images of the planet going back about 40 years. With the digitization of a warehouse of information, scientific study of worldwide trends using Landsat data suddenly became possible.

“Now you can ask questions on a global scale, over time, that have never been possible before,” says Rebecca Moore, engineering manager for the Google Earth Outreach program, a humanitarian arm of the Google Earth and Maps team.

Google currently gives a limited group of science and research partners full access to the mass of Landsat data, as well as to its parallel processing platform for running algorithms on that mountain of information.

“We’ve got about a thousand scientists,” Moore says, adding that many are analyzing forest and land cover or water resources. “Conservation biologists are doing nice modeling, and in this case, they’re analyzing datasets like Landsat in combination with, for example, the [Jet Propulsion Laboratory’s] Shuttle Radar Topography Mission elevation data.”

In spring of 2013, in collaboration with Time magazine, Google Earth Engine released its Timelapse web feature, which uses Landsat imagery to let users watch a time-lapse animation, running from 1984 to 2012, of any land area on Earth except those near the poles.

To create this visual history of almost the entire planet, the company sifted through more than 2 million Landsat images to find the best representation of every individual pixel in the model, with each pixel representing an area of 900 square meters during one of 29 years. Under the pricing model set prior to 2008, the images Google used would have cost more than $1.2 billion.
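The figures in this paragraph can be checked with a little arithmetic. The sketch below assumes that each pixel is square and that the pre-2008 price was the $600 per scene quoted earlier by Irons; the variable names are illustrative only.

```python
import math

# Figures quoted in the article.
pixel_area_m2 = 900          # area covered by one Timelapse pixel
images_used = 2_000_000      # "more than 2 million" Landsat images
first_year, last_year = 1984, 2012

# Assumption: the pre-2008 price of $600 per scene quoted by Irons.
price_per_scene_usd = 600

pixel_side_m = math.isqrt(pixel_area_m2)    # side length of a square pixel
years_covered = last_year - first_year + 1  # both end years are included
total_cost_usd = images_used * price_per_scene_usd

print(pixel_side_m, years_covered, total_cost_usd)
```

Under those assumptions the numbers line up: a 900-square-meter pixel is 30 meters on a side, 1984 through 2012 spans 29 years, and 2 million scenes at $600 each come to $1.2 billion.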

The following year, Google also completely regenerated its Google Maps and Google Earth imagery, using 2012 data from the NASA-built Landsat 7 satellite and a similar pixel-picking technique in which the most common representation of each pixel was chosen from a set of many satellite images. “We ran it on 66,000 computers in parallel,” Moore says. “It was more than one million hours of computation, but we were able to have the results in a couple of days.” The resolution now used in those products is twice that of the Timelapse animation.
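The pixel-picking idea can be illustrated with a toy example. The sketch below is not Google's implementation, which the article does not describe. It assumes each image is a small grid of integer pixel values already aligned to the same area, and it keeps the most common value at each position. The function name `most_common_composite` and the sample data are hypothetical.

```python
from collections import Counter

def most_common_composite(images):
    """Return a grid holding the most common value seen at each pixel position.

    `images` is a list of equally sized 2-D grids (lists of rows).
    Ties go to the value that appears first in the stack of images.
    """
    rows, cols = len(images[0]), len(images[0][0])
    composite = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Gather this pixel's value from every image in the stack.
            values = [image[r][c] for image in images]
            row.append(Counter(values).most_common(1)[0][0])
        composite.append(row)
    return composite

# Three hypothetical 2x2 "scenes"; 255 stands in for a bright cloud.
scenes = [
    [[10, 20], [30, 255]],
    [[10, 255], [30, 40]],
    [[255, 20], [30, 40]],
]
composite = most_common_composite(scenes)
print(composite)  # [[10, 20], [30, 40]]
```

Each scene here has one cloudy pixel, yet the composite has none, because the cloud is never the most common value at any position. Real satellite pixels are multi-band measurements rather than single integers, so this is only a toy version of the idea.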

The company also partnered with a professor in the Department of Geographical Sciences at the University of Maryland to build a fine-grained model of global changes in forest cover between 2000 and 2012. Matthew Hansen is a leading scientist when it comes to analyzing Earth-observation data to classify forest cover and forest change, so the sudden availability of so much imaging data was a major boon to his work.

“We used to always say, ‘We use the data we can afford, not the data we need.’ But what kind of science is that?” he says. “Especially for NASA, which has a lot of Earth system science objectives related to global climate change, the carbon cycle—you name it. You have to have global observations to drive those models.”

Hansen’s work with monitoring deforestation began at the national level in central Africa, but he ran into problems over the Congo Basin, where cloud-free images are almost impossible to come by. That was when he hit on the pixel-by-pixel method Google used.

“That’s how we work with [NASA’s] Moderate-Resolution Imaging Spectroradiometer. It’s always best-pixel-possible, so we started to do that with Landsat,” he says. He went on to map forests in Europe, Russia, Indonesia, and Mexico.

“At some point, we felt comfortable that we could do the globe, and so that was the basis of the discussion with Google,” says Hansen. “We have the Landsat archive here too, on campus, but I think if we tried to create full-bore, global imagery, it would take us six months to a year, whereas for Google it would take a week.”

Benefits

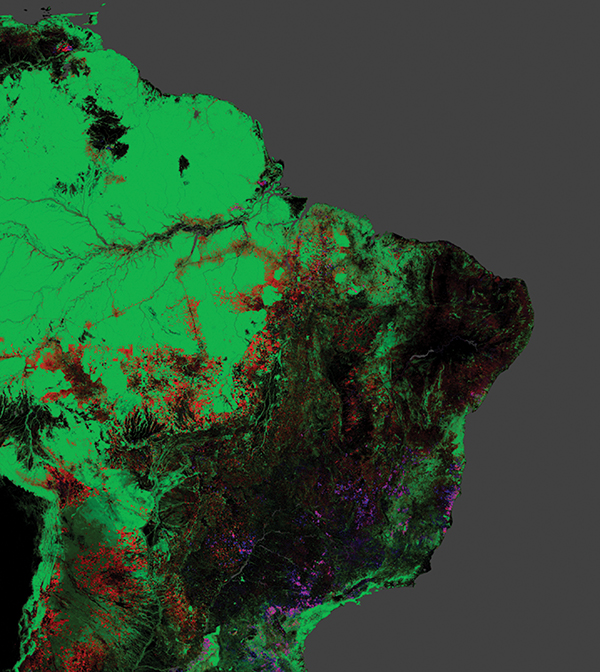

What Hansen and Google unveiled in late 2013, and what Google Earth Engine has posted on the front page of its website, is the first-ever global study of forest cover: a map of the world accurate down to 30 meters, depicting current forests and gains and losses between 2000 and 2012, with layers of data for each year available for download.

Meanwhile, Moore says Google’s Timelapse feature has proven popular, with the animation drawing more than 3 million viewers in its first week alone.

“You can see amazing phenomena so clearly,” she says. “You can see Las Vegas growing wildly while nearby Lake Mead is shrinking. You can see the deforestation of the Amazon, the artificial islands sprouting off the coast of Dubai, the Columbia Glacier receding in Alaska.”

As users have explored the past and present world with the tool, online newspaper articles, blogs, and others have started posting links to different parts of the globe, Moore says. “And people have found interesting things that are not just gloom and doom,” she adds, noting that these include meandering rivers, formations of oxbow lakes, and the shifting of the Cape Cod Harbor shoreline.

She says, however, that all this early work is only a beginning. “I think we’re at the dawn of a new voyage of discovery, and it’s a digital voyage,” Moore says. “I think we’re going to learn things that have been going on across the planet back to the ’80s and ’70s and discover things that were sitting there waiting to be discovered in this treasure trove of data.”

She notes that the scientists Google is partnering with are also using current data to predict future events. Some found that they could predict an outbreak of cholera six weeks in advance by observing plankton blooms off the coast of Calcutta. Another was able to observe landscape greening, rainfall, and temperatures to predict Rift Valley fever outbreaks eight weeks out.

“The uses of Landsat data are really broad,” says Irons. “Urban expansion, glacial retreat, agricultural production, coral degradation, ecosystem change—wherever you can think of land cover and land use changing, Landsat data has been applied there. Disaster recovery, water resources management—the list just keeps going on.”

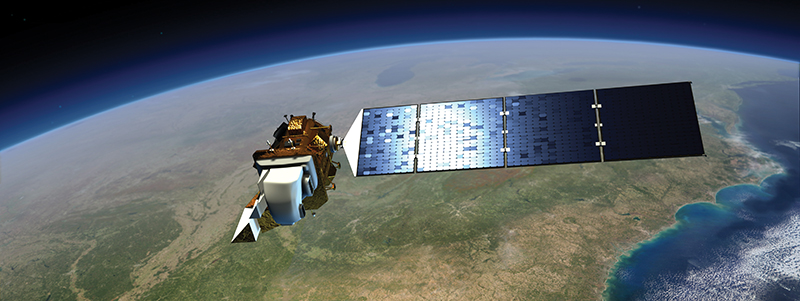

In February 2013, NASA launched the new Landsat 8 satellite, which Irons says has about the same spatial resolution as its predecessor but much higher performance in terms of signal-to-noise ratio. “That makes a big difference in your ability to recognize or to differentiate different kinds of land cover and subtleties within a land cover class, or to be more sensitive to change over time.”

Moore says Google Earth Engine is receiving information daily from the new satellite and that the data quality is high enough that there’s a possibility of including seasonal images in future additions to the time-lapse animation. At some point, she says, Google Earth Engine hopes to be able to produce a near-real-time report on the health of the planet: “All the best data streaming in from NASA satellites, not just the optical instruments but all sorts of scientific instruments—have that data coming in and make it available for science and for practical use.”

Irons says NASA and USGS have formed a study team to develop a plan for a coordinated, sustained land-imaging program for at least the next 20 years to ensure that there are no gaps in coverage. “Now we’re getting a full return on the investment of tax dollars that were spent to launch the satellite,” he says. “They’re distributing something like 3 million scenes per year, and people are beginning to develop the capacity to analyze large volumes of Landsat data.”

Having worked on the Landsat program for much of his 35-year career, Irons says he feels recent developments have brought that time, effort, and energy to fruition. “It’s been a very exciting few months following the Landsat 8 launch and seeing that the system’s working really well—and then to have people like Matt Hansen, Google, and others coming along and putting the data to work in such a productive way, it’s extremely gratifying.”

Google uses a fleet of cars and other vehicles outfitted with elaborate camera and laser mounts, above, to capture the street view of Earth.

A collaboration between Google and the University of Maryland used data from NASA-built Landsat satellites to create the first global study of Earth’s forest cover over time. This 2012 map depicting the Amazon rainforest shows existing forest cover in green, forest loss in red, forest gain in blue, and replaced forest in purple. The Amazon is the world’s richest and most diverse biological reservoir, has 20 percent of Earth’s fresh water, and has been losing thousands of square miles to logging, farming, and illegal road construction every year.

Illegal logging, such as this rosewood harvesting operation in Madagascar, and slash-and-burn farming practices are among the threats to the planet’s most valuable rainforests. Troves of Landsat data are now allowing researchers to examine global trends in surface cover, such as gains and losses in forest cover. Image courtesy of Erik Patel, CC-BY-SA 3.0

The company also used data from the NASA-built Landsat 7 satellite to regenerate its image of the entire planet and also to cover the last dozen years or so of its time-lapse animation of the planet’s recent history.