Robots Spur Software That Lends a Hand

NASA Technology

Robonaut 2 flew aboard Space Shuttle Discovery to the International Space Station (ISS) in early 2011, but it had been many years in the making. In 1997, Johnson Space Center engineers embarked on a mission to build a robot that could perform the same tasks as humans so it could assist astronauts in space.

In collaboration with the Defense Advanced Research Projects Agency (DARPA), Johnson aimed to build Robonaut 1—a humanoid robot complete with dexterous manipulation, or a capability to use its hands—to work alongside its human counterparts. In order to lend a helping hand, Robonaut needed to be able to use the same tools as astronauts to service space flight hardware, which was designed for human servicing.

Robert Ambrose, an automation and robotics engineer at Johnson, says the majority of research at the time on robotics autonomy (the ability to be self-governing) was focused on mobile robots that could avoid obstacles and drive over rough terrain. “There was a blind spot of sorts concerning manipulation,” he says. “What had not yet been addressed was using a robot’s arms and hands to interact with the world.”

In addition, artificial intelligence (the use of computers to perform tasks that usually require human intelligence) was not yet advanced enough for robots to respond to unanticipated changes in their environment. This was a particular concern for Robonaut, since communication between mission control and the ISS can be delayed or can even fail. Without commands, the robot would become useless.

Technology Transfer

In 2001, Johnson started seeking software for Robonaut 1 that could deliver automatic intelligence and learning. In cooperation with DARPA, the Center began working with Vanderbilt University, the University of Massachusetts, the Massachusetts Institute of Technology, and the University of Southern California. “We were looking for some simpler ways to teach robots and let them learn and internalize lessons on their own. We had some interesting approaches that we tested out using Robonaut 1,” says Ambrose.

One of the approaches was from Dr. Richard Alan Peters, a professor at Vanderbilt, who was researching how mammals learn in order to write a program that would let robots learn. He observed that people use their senses to acquire information about their environment and then take certain actions. He found this process critical to the formation of knowledge in the brain. After identifying some common patterns, he incorporated them into learning algorithms that could be used with a robot.

“We found there might be some really good approaches for teaching robots and letting them learn and develop capabilities on their own, rather than having to hard program everything,” says Ambrose.

Previous generations of artificial intelligence required pre- or hard programming of rules in order for the robot to determine how to respond. All of the objects in an environment had to be labeled and classified before the robot could decide how to treat them. Peters aimed for software that could support robot autonomy by enabling it to sense a new object, determine its attributes, and decide how best to handle it.
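The contrast between hard programming and learning from interaction can be illustrated with a small Python sketch. It is not Peters' algorithm or any NASA code. The rule table, the `ExperienceLearner` class, the attribute tuples (width in meters, mass in kilograms), and the grasp names are all hypothetical, and a simple nearest-neighbor lookup stands in for the learning step.

```python
from dataclasses import dataclass, field
from math import dist

# Hard-programmed approach: every object must be labeled in advance.
# (Hypothetical labels and grasp names, for illustration only.)
HARD_CODED_RULES = {"wrench": "power_grasp", "bolt": "pinch_grasp"}


def rule_based_action(label):
    """Return the pre-programmed action, or None for an unlabeled object."""
    return HARD_CODED_RULES.get(label)


@dataclass
class ExperienceLearner:
    """Chooses an action from remembered outcomes instead of fixed labels."""

    # Each memory is (attributes, action, succeeded).
    memories: list = field(default_factory=list)

    def record(self, attributes, action, succeeded):
        """Store the outcome of trying an action on a sensed object."""
        self.memories.append((tuple(attributes), action, succeeded))

    def choose_action(self, attributes, default="pinch_grasp"):
        """Reuse the action that succeeded on the most similar past object."""
        successes = [(attrs, act) for attrs, act, ok in self.memories if ok]
        if not successes:
            return default  # nothing learned yet, so fall back to a guess
        nearest = min(successes, key=lambda m: dist(m[0], tuple(attributes)))
        return nearest[1]


learner = ExperienceLearner()
learner.record((0.30, 1.2), "power_grasp", True)   # large, heavy object
learner.record((0.30, 1.2), "pinch_grasp", False)  # the pinch grasp failed
learner.record((0.02, 0.01), "pinch_grasp", True)  # small, light object

# A new, unlabeled object: the rule table has no answer, the learner does.
unlabeled_rule = rule_based_action("unknown_tool")
learned_choice = learner.choose_action((0.25, 0.9))
```

In this sketch the rule table returns nothing for an object it has never been told about, while the learner picks the grasp that worked on the closest object it has already handled.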

Under a Cooperative Agreement, testing on Robonaut 1 demonstrated Peters’ algorithms were able to produce learned knowledge from sensory and motor control interactions—just like mammals, but without having a program written to tell it what to do.

By 2006, the group of partners had developed the first robotic assistant prototype, Robonaut 1, and Ambrose says the results significantly informed the mechanical and electrical design of the second generation, or Robonaut 2. “We have several students who came from that collaboration who now work on Robonaut 2. They are doing things we could barely imagine back in the early 2000s. They took it to a new level and gave the robot the ability to reason about how to handle and interact with objects and tools. It is now running on Robonaut 2 in space.”

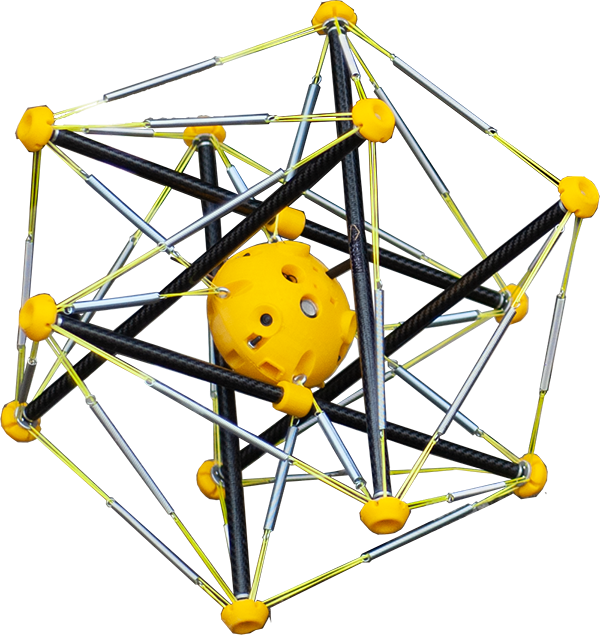

For Peters, the work led to several patents related to robotics intelligence. Now, he serves as the chief technology officer at Universal Robotics, a software engineering company in Nashville, Tennessee, where the NASA-derived technology is available in a product called Neocortex.

Benefits

According to Hob Wubbena, vice president of Universal Robotics, Neocortex mimics the way people learn through the process of acting, sensing, and reacting. Just like the part of the brain that Neocortex is named after, the software provides insight into the data or processes acquired through sensing. This sensing data is captured by physical sensors such as cameras and lasers, or by other means.

Because Neocortex requires observation and interaction with the physical world, Universal Robotics created Spatial Vision to give the software sight of its surroundings. From its interactions with what it sees, Neocortex discovers what to do in order to be successful. A third product, Autonomy, is like the muscle control for Neocortex, and provides fast, complex motion for a robot.

According to the company, Neocortex's ability to let machines adapt and react to variables and learn from experience opens up opportunities for process improvement. Neocortex provides a new option in places where automation can improve efficiency and worker safety, such as warehousing, mining, hazardous waste handling, and the operation of vehicles such as forklifts. The more Neocortex interacts with its environment, the smarter it becomes.

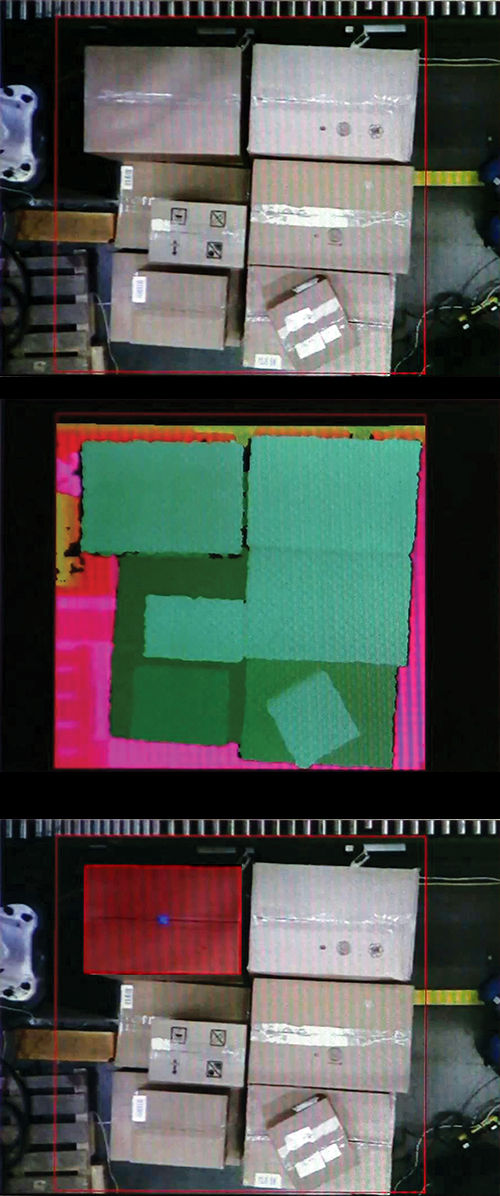

A popular logistics application of Neocortex is for placing, stacking, or removing boxes from pallets or trailers. The system can analyze factors such as shape and orientation to determine the best way to grasp, lift, move, and set down the items, as well as the fastest way to unload, all while compensating for weight, orientation, and even crushed or wet containers.

Wubbena says the product can improve productivity by providing information that an employee cannot necessarily perceive, such as load balancing during the stacking of items in a trailer. Also, because these tasks require repetitive lifting and twisting, the technology from Universal Robotics could help reduce the number of occupational injuries that occur. “Through the intelligence, new areas can be automated which contribute to safety as well as overall process efficiency,” says Wubbena.
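As a rough illustration of the load balancing mentioned above, the following Python sketch picks the position for the next box that keeps the load's side-to-side center of mass closest to the trailer centerline. It is a hypothetical example, not Universal Robotics' method. The function names, the `(mass_kg, offset_m)` box representation, and the candidate slot positions are assumptions made for illustration.

```python
def lateral_center_of_mass(boxes):
    """Mass-weighted average lateral offset of the load, in meters.

    boxes is a list of (mass_kg, offset_m) pairs, where offset_m is the
    distance from the trailer centerline (negative = left, positive = right).
    """
    total_mass = sum(mass for mass, _ in boxes)
    if total_mass == 0:
        return 0.0
    return sum(mass * offset for mass, offset in boxes) / total_mass


def best_slot(boxes, new_mass, candidate_offsets):
    """Return the candidate offset that leaves the load best centered."""
    return min(
        candidate_offsets,
        key=lambda offset: abs(
            lateral_center_of_mass(boxes + [(new_mass, offset)])
        ),
    )


# Hypothetical load: a 20 kg box on the left and a 10 kg box on the right.
loaded = [(20.0, -0.5), (10.0, 0.5)]
current_offset = lateral_center_of_mass(loaded)
next_slot = best_slot(loaded, new_mass=10.0, candidate_offsets=[-0.5, 0.5])
```

With these numbers the load starts out shifted to the left, so the sketch places the next 10 kg box on the right to bring the center of mass back to the centerline.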

Universal Robotics is working with Fortune 500 companies to install its technology, which is currently being set up to robotically stack and package frozen meat as well as to provide 3D vision guidance for automatically handling a variety of unusually shaped bottles. The company has also worked on prototypes for automated random bin picking of metallic objects like automotive parts, as well as deformable objects like bulk rubber.

Not all applications of Universal Robotics’ technology, however, employ a robot. For example, the software can help workers make faster decisions by recognizing and reading bar codes on packages and then projecting a number on each box to tell the handler where the package should go. Additionally, Wubbena says Neocortex has been tested for a significantly different application: analyzing electrocardiogram data to find predictive patterns in heart attack victims.
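The bar code example above amounts to a lookup from a decoded code to a destination number. A minimal Python sketch of that step follows, assuming the bar code has already been read into a string. The routing table, the prefix-matching scheme, and the function name are hypothetical and are not taken from the company's software.

```python
def destination_for(barcode, routing_table, unknown=0):
    """Return the destination number to project onto a package.

    routing_table maps bar code prefixes to destination numbers. The longest
    matching prefix wins; codes that match nothing get the `unknown` number.
    """
    matches = [prefix for prefix in routing_table if barcode.startswith(prefix)]
    if not matches:
        return unknown
    return routing_table[max(matches, key=len)]


# Hypothetical routing table: prefix -> destination (for example, a dock door).
ROUTES = {"84": 3, "8412": 7, "50": 1}

door_specific = destination_for("8412345678", ROUTES)  # matches "84" and "8412"
door_general = destination_for("8499999999", ROUTES)   # matches only "84"
door_unknown = destination_for("1234567890", ROUTES)   # no matching prefix
```

Sending unmatched codes to a reserved number lets the handler set those packages aside for manual sorting.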

While the innovations from Robonaut 1 are in the process of improving life on the ISS, they are already benefiting life on Earth. “It’s really a tribute to NASA that a partnership was created with such a significant impact for the space program as well as business,” says Wubbena. “As we continue to help companies improve efficiency, quality and employee safety, we are proud to have worked with NASA to develop technology that helps the US maintain its competitive technical edge.”

Universal Robotics™, Neocortex™, and Spatial Vision™ are trademarks of Universal Robotics.

This commercial robot is using software developed in partnership with NASA to identify boxes and move them from a pallet to a conveyor belt.

The top image shows the robot camera point of view, the middle image shows the 3D depth map, and the bottom image shows the view when the robot locates its next pick.

Robonaut 2 is checked before being packaged for launch to the International Space Station. NASA worked with partners to develop the ability for its humanoid robot to reason and interact with objects.